SR-IOV (Single Root I/O Virtualization)

SR-IOV is also a PCIe specification.

PCIe has the concept of a Function (see BDF). The SR-IOV specification introduces two types of function: the PF and the VF.

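In HPC scenarios, the typical setup is:

- Front-end NIC: SR-IOV is enabled and the NIC is split into multiple VFs; each VF can back one ENI (elastic network interface);

- Back-end RDMA NIC: SR-IOV is usually left disabled, and the whole card is passed through to the guest;

- GPU: SR-IOV is usually left disabled, and the whole card is passed through to the guest.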

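Can a PF be passed through to a guest?

With SR-IOV enabled, no: the PF is reserved for the host and cannot be given to a guest. With SR-IOV disabled, yes, because the PF then represents the whole device.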

Virtual Function (VF) / Physical Function (PF)

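PF: Physical Function. This is the "main function" that manages the entire physical device and has full ability to configure and control it. Normally only the host's driver manages the PF. By default SR-IOV is disabled and the PF behaves as a conventional PCIe device.

VF: Virtual Function. This is a lightweight PCIe function derived from the PF; each VF can be assigned directly to a virtual machine as its own independent "virtual hardware".

The SR-IOV configuration lives in the PF's configuration space. The PF is a full-featured PCIe function that can be discovered, managed, and operated like any other PCIe device. It has the full set of configuration resources and can be used to configure or control the PCIe device.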

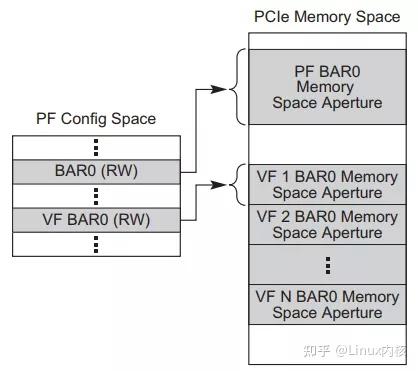

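A VF's BAR space is carved out of resources that the PF describes (the VF BAR registers in the PF's SR-IOV capability). VFs do not support I/O space, so VF BARs must be memory BARs, mapped into the system address space as MMIO.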

root@p194-162-015:~# lspci | grep -i "nvidia"

05:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

06:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

07:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

08:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

18:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

38:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

48:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

59:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

98:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

b8:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

c8:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

d9:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

root@p194-162-015:~# lspci -k -s 18:00.0

18:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

Subsystem: NVIDIA Corporation Device 198b

Kernel driver in use: vfio-pci

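Here the driver bound to the device is vfio-pci rather than NVIDIA's own driver. On a machine where the device is not passed through, the driver in use is nvidia rather than vfio-pci. This is why, as described in "How to use VFIO" below, a device must first be unbound from its current driver before it can be passed through: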

root@iv-ye419lfegw7fzxco08cz:~# lspci | grep -i "nvidia"

0d:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

40:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

41:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

42:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

43:00.0 Bridge: NVIDIA Corporation Device 22a3 (rev a1)

44:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

4d:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

58:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

90:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

c1:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

ca:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

d5:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

root@iv-ye419lfegw7fzxco08cz:~# lspci -k -s 44:00.0

44:00.0 3D controller: NVIDIA Corporation Device 2329 (rev a1)

Subsystem: NVIDIA Corporation Device 198b

Kernel driver in use: nvidia

Kernel modules: nvidia_drm, nvidia
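The unbind-and-rebind step can be sketched with the standard sysfs files `driver_override`, `driver/unbind`, and `drivers_probe`. This is only a minimal sketch: the function name `bind_vfio` and the `SYSFS_PCI` override variable are made up for illustration, and it assumes the vfio-pci module is already loaded and that the script runs as root.

```shell
# Sketch: hand a PCI device over to vfio-pci through sysfs.
# SYSFS_PCI is a hypothetical override for the sysfs PCI root, so the
# function can be dry-run against a scratch directory; leave it unset
# on a real host.
bind_vfio() {
    local bdf="$1" root dev          # bdf is the full address, e.g. 0000:18:00.0
    root="${SYSFS_PCI:-/sys/bus/pci}"
    dev="$root/devices/$bdf"

    [ -d "$dev" ] || { echo "no such device: $bdf" >&2; return 1; }

    # Tell the PCI core that only vfio-pci may claim this device.
    echo vfio-pci > "$dev/driver_override" || return 1

    # Detach the current driver (for example nvidia), if there is one.
    if [ -e "$dev/driver" ]; then
        echo "$bdf" > "$dev/driver/unbind" || return 1
    fi

    # Ask the PCI core to re-probe; driver_override steers it to vfio-pci.
    echo "$bdf" > "$root/drivers_probe"
}
```

On a host this would be called as `bind_vfio 0000:44:00.0`, after which `lspci -k -s 44:00.0` should report vfio-pci as the driver in use.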

Once VFs are created, each VF behaves as a device of its own and shows up in lspci. For example, this NIC has 16 VFs created through SR-IOV, and lspci lists 16 Virtual Functions:

root@p22-048-018:/sys/class/net# lspci | grep -i "mellanox"

01:00.0 Ethernet controller: Mellanox Technologies MT2910 Family [ConnectX-7]

01:00.1 Ethernet controller: Mellanox Technologies MT2910 Family [ConnectX-7]

01:00.2 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:00.3 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:00.4 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:00.5 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:00.6 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:00.7 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.0 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.1 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.2 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.3 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.4 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.5 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.6 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:01.7 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:02.0 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

01:02.1 Ethernet controller: Mellanox Technologies ConnectX Family mlx5Gen Virtual Function

0d:00.0 Ethernet controller: Mellanox Technologies MT43244 BlueField-3 integrated ConnectX-7 network controller (rev 01)

0d:00.1 DMA controller: Mellanox Technologies MT43244 BlueField-3 SoC Management Interface (rev 01)

54:00.0 Ethernet controller: Mellanox Technologies MT43244 BlueField-3 integrated ConnectX-7 network controller (rev 01)

54:00.1 DMA controller: Mellanox Technologies MT43244 BlueField-3 SoC Management Interface (rev 01)

8b:00.0 Ethernet controller: Mellanox Technologies MT43244 BlueField-3 integrated ConnectX-7 network controller (rev 01)

8b:00.1 DMA controller: Mellanox Technologies MT43244 BlueField-3 SoC Management Interface (rev 01)

d5:00.0 Ethernet controller: Mellanox Technologies MT43244 BlueField-3 integrated ConnectX-7 network controller (rev 01)

d5:00.1 DMA controller: Mellanox Technologies MT43244 BlueField-3 SoC Management Interface (rev 01)

Whether a single VF or the whole device is passed through, the host's lspci still lists the device afterwards; only the bound driver changes.
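Since only the driver changes, a quick way to tell a passed-through device from a host-owned one is to read the `driver` symlink in sysfs. A small sketch, where `pci_driver` and the `SYSFS_PCI` override are illustrative names rather than existing tools:

```shell
# Sketch: print the name of the driver bound to a PCI function, or "none".
# SYSFS_PCI is a hypothetical override used only for dry runs.
pci_driver() {
    local dev="${SYSFS_PCI:-/sys/bus/pci}/devices/$1"
    if [ -L "$dev/driver" ]; then
        # The symlink points at .../bus/pci/drivers/<name>.
        basename "$(readlink "$dev/driver")"
    else
        echo none
    fi
}
```

For the two machines above, `pci_driver 0000:18:00.0` would be expected to print vfio-pci on the passthrough host, and `pci_driver 0000:44:00.0` to print nvidia on the other one.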

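After VFs are passed through, does the PF only serve a management role, or can it still be used as a working device like the VFs?

Generally, the PF can still be used as well.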

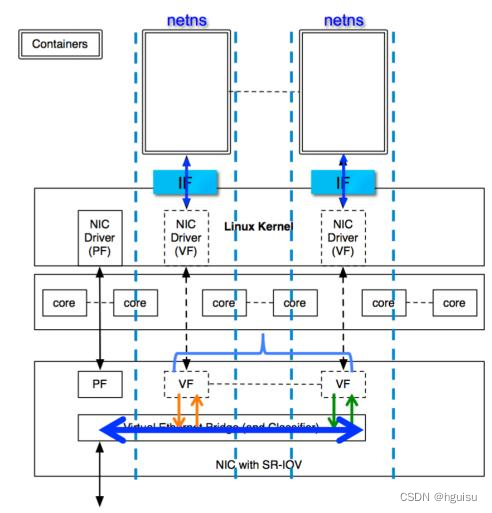

Can a VF be kept on the host instead of being passed through to a guest?

Yes, for example to give it to containers for container networking.

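After a whole card is passed through to a guest, can the guest use the SR-IOV capability that the host left unused?

No. Inside the guest, the SR-IOV capability does not appear in the device's capability list (it is unclear whether the SR-IOV capability can be passed into a guest at all):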

root@iv-ye8tels3y8h9l3bnve4b:~# lspci -s 65:02.0 -vvv

65:02.0 VGA compatible controller: NVIDIA Corporation GB203 [GeForce RTX 5080] (rev a1) (prog-if 00 [VGA controller])

Subsystem: NVIDIA Corporation GB203 [GeForce RTX 5080]

Physical Slot: 2-12

Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 10

NUMA node: 0

Region 0: Memory at c0000000 (32-bit, non-prefetchable) [size=64M]

Region 1: Memory at 6fc00000000 (64-bit, prefetchable) [size=16G]

Region 3: Memory at 70012000000 (64-bit, prefetchable) [size=32M]

Region 5: I/O ports at 8080 [size=128]

Expansion ROM at bc100000 [virtual] [disabled] [size=512K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot+,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [48] MSI: Enable- Count=1/16 Maskable+ 64bit+

Address: 0000000000000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [60] Express (v2) Legacy Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s <64ns, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq-

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop- FLReset-

MaxPayload 256 bytes, MaxReadReq 4096 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 32GT/s, Width x16, ASPM L1, Exit Latency L1 unlimited

ClockPM+ Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 2.5GT/s (downgraded), Width x16

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range AB, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp+ 10BitTagReq+ OBFF Via message, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS-

AtomicOpsCap: 32bit+ 64bit+ 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- 10BitTagReq+ OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [9c] Vendor Specific Information: Len=14 <?>

Capabilities: [b0] MSI-X: Enable+ Count=9 Masked-

Vector table: BAR=0 offset=00b90000

PBA: BAR=0 offset=00ba0000

Capabilities: [c8] Vendor Specific Information: Len=08 <?>

Kernel driver in use: nvidia

Kernel modules: nvidia_drm, nvidia

SR-IOV Live Migration

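The PCIe specification defines a live-migration capability for VFs, but most devices do not implement it. As an example, look at the Migration fields below:

# Run the following command:

lspci -s 0000:01:00.1 -vvv

# Find the section that looks like Capabilities: [180 v1] Single Root I/O Virtualization (SR-IOV)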

Capabilities: [180 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy- 10BitTagReq-

IOVSta: Migration-

Initial VFs: 16, Total VFs: 16, Number of VFs: 0, Function Dependency Link: 01

VF offset: 17, stride: 1, Device ID: 101e

Supported Page Size: 000007ff, System Page Size: 00000001

Region 0: Memory at 00000600dc000000 (64-bit, prefetchable)

VF Migration: offset: 00000000, BIR: 0
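When checking many functions, reading these flags by eye gets tedious. The following is a rough sketch that condenses the SR-IOV block of `lspci -vvv` output into one line; `sriov_summary` is an illustrative name, and the parsing assumes the field layout shown in the output above, which may differ between pciutils versions.

```shell
# Sketch: read `lspci -vvv` text for one function on stdin and summarise
# its SR-IOV capability. A trailing "+" in lspci output means the bit is
# set, "-" means it is clear.
sriov_summary() {
    awk '
        /Single Root I\/O Virtualization/ { found = 1 }
        found && /IOVCap:/ { cap = (index($0, "Migration+") > 0) ? "yes" : "no" }
        found && /IOVCtl:/ { en  = (index($0, "Enable+")    > 0) ? "yes" : "no" }
        found && /Number of VFs:/ {
            num = $0
            sub(/.*Number of VFs: */, "", num)   # drop everything before the value
            sub(/,.*/, "", num)                  # drop everything after it
        }
        END {
            if (!found)
                print "sriov=absent"
            else
                printf "sriov=present enabled=%s migration_capable=%s numvfs=%s\n", en, cap, num
        }
    '
}
```

Typical use would be `lspci -s 0000:01:00.1 -vvv | sriov_summary`, run as root so that lspci can read the extended capabilities.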

SR-IOV in practice

# Check how many VFs the SR-IOV device supports:

cat /sys/class/net/enp49s0f1/device/sriov_totalvfs

# Set the number of VFs on the device:

echo '7' > /sys/class/net/enp49s0f1/device/sriov_numvfs
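Two details are easy to trip over when writing `sriov_numvfs`: the value must not exceed `sriov_totalvfs`, and the kernel refuses to go from one non-zero VF count directly to another, so existing VFs have to be disabled (write 0) first. A minimal sketch wrapping both checks, with `set_numvfs` being an illustrative helper name:

```shell
# Sketch: set the VF count on a PF, given the PF's sysfs device directory.
set_numvfs() {
    local dev="$1" want="$2" total current
    total=$(cat "$dev/sriov_totalvfs") || return 1
    current=$(cat "$dev/sriov_numvfs") || return 1

    if [ "$want" -gt "$total" ]; then
        echo "requested $want VFs, device supports at most $total" >&2
        return 1
    fi
    [ "$current" -eq "$want" ] && return 0

    # Changing between two non-zero counts is rejected by the kernel,
    # so tear down the existing VFs first.
    if [ "$current" -ne 0 ]; then
        echo 0 > "$dev/sriov_numvfs" || return 1
    fi
    [ "$want" -eq 0 ] || echo "$want" > "$dev/sriov_numvfs"
}
```

With the interface used above this would be `set_numvfs /sys/class/net/enp49s0f1/device 7`. Note that dropping to 0 destroys the current VFs, so anything using them has to be detached beforehand.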

How do you tell which PCI device is a PF and which is a VF?

# VF device

root@p22-048-018:/sys/bus/pci/devices/0000:01:00.2# ls

ari_enabled d3cold_allowed iommu_group modalias reset subsystem

broken_parity_status device irq msi_bus reset_method subsystem_device

class dma_mask_bits link msi_irqs resource subsystem_vendor

config driver local_cpulist numa_node resource0 uevent

consistent_dma_mask_bits driver_override local_cpus physfn resource0_wc vendor

current_link_speed enable max_link_speed power revision

current_link_width iommu max_link_width power_state sriov_vf_msix_count

# PF device

root@p22-048-018:/sys/bus/pci/devices/0000:01:00.1# ls

aer_dev_correctable dma_mask_bits mlx5_core.ctl.1 ptp sriov_drivers_autoprobe

aer_dev_fatal driver mlx5_core.eth.1 real_miss sriov_numvfs

aer_dev_nonfatal driver_override mlx5_core.eth-rep.1 remove sriov_offset

ari_enabled enable mlx5_core.fwctl.1 rescan sriov_stride

broken_parity_status hwmon mlx5_num_vfs reset sriov_totalvfs

class iommu modalias reset_method sriov_vf_device

commands_cache iommu_group msi_bus resource sriov_vf_total_msix

config irq msi_irqs resource0 subsystem

consistent_dma_mask_bits link net resource0_wc subsystem_device

current_link_speed local_cpulist numa_node revision subsystem_vendor

current_link_width local_cpus pools roce_enable uevent

d3cold_allowed max_link_speed power rom vendor

device max_link_width power_state sriov vpd

As shown, a VF has a physfn entry (a link to its parent PF), which marks it as a VF, while a PF has sriov_totalvfs, which marks it as a PF.
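The same rule can be turned into a small check. This is a sketch only: `sriov_role` and the `SYSFS_PCI` override are illustrative names, and the logic relies on nothing beyond the `physfn` and `sriov_totalvfs` entries shown in the listings above.

```shell
# Sketch: classify a PCI function as a VF, a PF, or neither.
# SYSFS_PCI is a hypothetical override used only for dry runs.
sriov_role() {
    local dev="${SYSFS_PCI:-/sys/bus/pci}/devices/$1"
    if [ -L "$dev/physfn" ]; then
        # physfn is a symlink to the parent PF's device directory.
        echo "vf $(basename "$(readlink "$dev/physfn")")"
    elif [ -e "$dev/sriov_totalvfs" ]; then
        echo pf
    else
        echo none
    fi
}
```

On the host above, `sriov_role 0000:01:00.2` would be expected to report a VF together with its parent PF, and `sriov_role 0000:01:00.1` to report pf.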

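To check whether a physical device has SR-IOV enabled:

# Run the following command:

lspci -s 0000:01:00.1 -vvv

# Find the section that looks like Capabilities: [180 v1] Single Root I/O Virtualization (SR-IOV)

# Below, IOVCtl: Enable- means SR-IOV is not enabled on this device, and Number of VFs: 0 means 0 VFs are currently in use

# - Initial VFs: the number of VFs initially associated with the PF, normally fixed by the vendor or firmware. Here, "Initial VFs: 16" means the device starts with resources for 16 VFs.

# - Total VFs: the maximum number of VFs the device can support in hardware, 16 here.

# - Number of VFs: the number of VFs currently activated, i.e. created by software. Although the hardware has reserved resources for up to 16 VFs, software has not created any yet, so the number of usable VFs is 0.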

Capabilities: [180 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy- 10BitTagReq-

IOVSta: Migration-

Initial VFs: 16, Total VFs: 16, Number of VFs: 0, Function Dependency Link: 01

VF offset: 17, stride: 1, Device ID: 101e

Supported Page Size: 000007ff, System Page Size: 00000001

Region 0: Memory at 00000600dc000000 (64-bit, prefetchable)

VF Migration: offset: 00000000, BIR: 0

# For comparison, a device with SR-IOV enabled (IOVCtl: Enable+ shows it is on, and Number of VFs: 16 means 16 VFs are currently in use):

Capabilities: [180 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- Interrupt Message Number: 000

IOVCtl: Enable+ Migration- Interrupt- MSE+ ARIHierarchy+ 10BitTagReq-

IOVSta: Migration-

Initial VFs: 16, Total VFs: 16, Number of VFs: 16, Function Dependency Link: 00

VF offset: 2, stride: 1, Device ID: 101e

Supported Page Size: 000007ff, System Page Size: 00000001

Region 0: Memory at 00000600de000000 (64-bit, prefetchable)

VF Migration: offset: 00000000, BIR: 0

# A VF does not have this capability, so this section is absent for a VF altogether.
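The "VF offset" and "stride" fields determine where the VFs land in lspci. The n-th VF (counting from 1) gets the routing ID: PF routing ID + offset + (n - 1) * stride, where the routing ID packs bus, device, and function into 16 bits. A sketch of that arithmetic follows; `vf_bdf` is an illustrative name, and the example assumes that the enabled capability above (offset 2, stride 1) belongs to the PF at 01:00.0.

```shell
# Sketch: compute the address of the n-th VF of a PF.
# usage: vf_bdf PF_ADDR FIRST_VF_OFFSET VF_STRIDE VF_INDEX
# PF_ADDR is bus:dev.fn in hex without the domain (e.g. 01:00.0);
# VF_INDEX counts from 1.
vf_bdf() {
    local pf="$1" offset="$2" stride="$3" n="$4"
    local bus rest dev fn rid
    bus=${pf%%:*}; rest=${pf#*:}
    dev=${rest%%.*}; fn=${rest#*.}

    # Routing ID layout: bus in bits 15:8, device in 7:3, function in 2:0.
    rid=$(( (0x$bus << 8) | (0x$dev << 3) | 0x$fn ))
    rid=$(( rid + offset + (n - 1) * stride ))

    printf '%02x:%02x.%x\n' \
        $(( (rid >> 8) & 0xff )) $(( (rid >> 3) & 0x1f )) $(( rid & 7 ))
}
```

Under that assumption, `vf_bdf 01:00.0 2 1 1` gives 01:00.2 and `vf_bdf 01:00.0 2 1 16` gives 01:02.1, which matches the first and last Virtual Function in the Mellanox listing earlier. The same values are also exposed in the PF's sysfs directory as sriov_offset and sriov_stride.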