Slab in Linux

Slab: n. a thick plate, a flat board, a thick slice.

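Summary: the kernel often needs small, short-lived data structures. The earlier allocation paths (kmalloc() and friends) were geared toward larger requests, and because these objects are temporary, allocating and freeing them over and over hurts performance. So a freed object is not really released: it is kept in a cache for the next request, which avoids a fresh allocation.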

Motivation: Kernel modules and drivers often need to allocate temporary storage for non-persistent structures and objects, such as inodes, task structures, and device structures. These objects are uniform in size and are allocated and released many times during the life of the kernel. In earlier Unix and Linux implementations, the usual mechanisms for creating and releasing these objects were the kmalloc() and kfree() kernel calls.

However, these used an allocation scheme that was optimized for allocating and releasing pages in multiples of the hardware page size. For the small transient objects often required by the kernel and drivers, these page allocation routines were horribly inefficient, leaving the individual kernel modules and drivers responsible for optimizing their own memory usage.

Linux Memory Management: Slabs

When a slab-allocated object is released after use, the slab allocation system typically keeps it cached (rather than doing the work of destroying it), ready for re-use the next time an object of that type is needed (thus avoiding the work of constructing and initialising a new object).
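A minimal user-space sketch of this caching behaviour. Everything here is an assumption made for illustration: the toy_object type and the toy_cache_* helpers do not exist in the kernel, and malloc() stands in for whatever supplies fresh memory. Released objects go onto a free list instead of back to the system, and the constructor runs only for memory that has never been handed out before.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical object type; stands in for an inode, task structure, etc. */
struct toy_object {
    int id;
    char payload[56];
};

/* The free-list link lives outside the object, so a cached object
 * keeps its constructed contents while it waits for re-use. */
struct toy_slot {
    struct toy_slot *next_free;
    struct toy_object obj;
};

struct toy_cache {
    struct toy_slot *free_list;  /* released objects kept for re-use */
    unsigned long ctor_calls;    /* how often an object had to be constructed */
    unsigned long reuses;        /* how often a cached object was handed out */
};

static void toy_object_ctor(struct toy_object *o)
{
    memset(o, 0, sizeof(*o));
    o->id = -1;
}

static struct toy_object *toy_cache_alloc(struct toy_cache *c)
{
    struct toy_slot *slot = c->free_list;

    if (slot) {                      /* fast path: re-use a cached object */
        c->free_list = slot->next_free;
        c->reuses++;
        return &slot->obj;
    }
    slot = malloc(sizeof(*slot));    /* slow path: new memory, new object */
    if (!slot)
        return NULL;
    toy_object_ctor(&slot->obj);
    c->ctor_calls++;
    return &slot->obj;
}

static void toy_cache_free(struct toy_cache *c, struct toy_object *o)
{
    struct toy_slot *slot;

    assert(o != NULL);
    slot = (struct toy_slot *)((char *)o - offsetof(struct toy_slot, obj));
    slot->next_free = c->free_list;  /* cache it instead of calling free() */
    c->free_list = slot;
}

/* Hand all cached memory back to the system. */
static void toy_cache_drain(struct toy_cache *c)
{
    while (c->free_list) {
        struct toy_slot *slot = c->free_list;

        c->free_list = slot->next_free;
        free(slot);
    }
}
```

Allocating, freeing, and allocating again returns the same address the second time, with ctor_calls still at 1. In this sketch the caller is expected to leave the object in a reusable state before freeing it.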

In Linux:

The following passage puts it concisely:

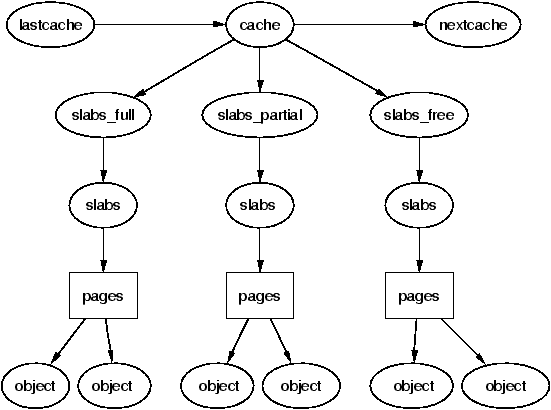

The slab allocator consists of a variable number of caches that are linked together on a doubly linked circular list called a cache chain. A cache, in the context of the slab allocator, is a manager for a number of objects of a particular type.

Each cache maintains blocks of contiguous pages in memory called slabs which are carved up into small chunks for the data structures and objects the cache manages.

[Comprehensive] Slab Allocator

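The passage above introduces several concepts: "cache chain", "cache", "slab", "data structure", and so on. They relate to each other as follows:

This figure is somewhat out of date, because kmem_cache no longer contains these three lists.

The data structure that represents a cache is struct kmem_cache.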
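The cache chain described earlier (caches linked on a doubly linked circular list) can be sketched in user space roughly as follows. This is an illustration under stated assumptions, not the kernel's code: toy_list imitates the style of the kernel's list_head, and toy_kmem_cache, toy_cache_of, and toy_find_cache are hypothetical names invented for this note.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Minimal circular doubly linked list node. */
struct toy_list {
    struct toy_list *prev, *next;
};

/* Hypothetical, heavily reduced stand-in for a cache descriptor. */
struct toy_kmem_cache {
    const char *name;
    size_t object_size;
    struct toy_list list;   /* links this cache into the cache chain */
};

/* An empty chain is a head that points at itself. */
static void toy_list_init(struct toy_list *head)
{
    head->prev = head;
    head->next = head;
}

/* Insert node just before head, i.e. at the tail of the chain. */
static void toy_list_add_tail(struct toy_list *node, struct toy_list *head)
{
    node->prev = head->prev;
    node->next = head;
    head->prev->next = node;
    head->prev = node;
}

/* Recover the enclosing cache from a pointer to its embedded list node. */
#define toy_cache_of(ptr) \
    ((struct toy_kmem_cache *)((char *)(ptr) - \
                               offsetof(struct toy_kmem_cache, list)))

/* Walk the chain once around and return the cache with the given name. */
static struct toy_kmem_cache *toy_find_cache(struct toy_list *chain,
                                             const char *name)
{
    struct toy_list *p;

    assert(name != NULL);
    for (p = chain->next; p != chain; p = p->next) {
        struct toy_kmem_cache *c = toy_cache_of(p);

        if (strcmp(c->name, name) == 0)
            return c;
    }
    return NULL;
}
```

Because the list is circular, the walk ends when it arrives back at the head, so no NULL terminator is needed.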

Difference between cache and slab

For the difference between a cache and a slab, see this question: linux kernel - SLAB memory management - Stack Overflow. Spelled out, the question asks: Why does each cache contain multiple 'slabs'? What differentiates each slab within a cache? Why not simply have the cache filled with the data objects themselves? Why does there need to be this extra layer?

The purpose of such an extra layer is to prevent memory fragmentation issues that could happen if the memory allocation was made in a simple and intuitive manner.

Thus, the kmem_cache object holds 3 lists of its slabs, gathered in 3 flavours:

- Empty slabs: these slabs contain no in-use objects.

- Partial slabs: these slabs contain objects currently in use, but there is still room for new objects.

- Full slabs: these slabs contain objects being used and cannot host new objects (full …).


When requesting an object through the slab allocator,

- It will try to get within a partial slab,

- If it cannot, it will get it from an empty slab.
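The lookup order above (partial slabs first, then empty ones) can be sketched as a small user-space model. It follows the three-list scheme described in the quoted answer, which, as noted earlier, is not how the current kmem_cache is laid out. All names (toy_slab, toy_cache_lists, toy_take_object) are hypothetical, and only object counts are tracked, not real memory.

```c
#include <assert.h>
#include <stddef.h>

enum toy_state { TOY_EMPTY, TOY_PARTIAL, TOY_FULL };

struct toy_slab {
    unsigned int inuse;      /* objects currently handed out */
    unsigned int capacity;   /* objects the slab can hold */
    struct toy_slab *next;
};

struct toy_cache_lists {
    struct toy_slab *lists[3];   /* indexed by enum toy_state */
};

static enum toy_state toy_slab_state(const struct toy_slab *s)
{
    assert(s->capacity > 0 && s->inuse <= s->capacity);
    if (s->inuse == 0)
        return TOY_EMPTY;
    if (s->inuse == s->capacity)
        return TOY_FULL;
    return TOY_PARTIAL;
}

/* File a slab on the list that matches its current state. */
static void toy_list_push(struct toy_cache_lists *c, struct toy_slab *s)
{
    enum toy_state st = toy_slab_state(s);

    s->next = c->lists[st];
    c->lists[st] = s;
}

static struct toy_slab *toy_list_pop(struct toy_cache_lists *c,
                                     enum toy_state st)
{
    struct toy_slab *s = c->lists[st];

    if (s) {
        c->lists[st] = s->next;
        s->next = NULL;
    }
    return s;
}

/* Take one object. Returns the slab it came from, or NULL when no
 * partial or empty slab exists and the cache would have to grow. */
static struct toy_slab *toy_take_object(struct toy_cache_lists *c)
{
    struct toy_slab *s = toy_list_pop(c, TOY_PARTIAL);

    if (!s)
        s = toy_list_pop(c, TOY_EMPTY);
    if (!s)
        return NULL;
    s->inuse++;
    toy_list_push(c, s);     /* re-file under its new state */
    return s;
}
```

Preferring partial slabs keeps in-use objects packed into as few slabs as possible, so empty slabs stay empty and remain candidates for being returned to the page allocator.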

Slab

A slab is a set of one or more contiguous pages. This memory is further divided into equal segments the size of the object type that the cache is managing.
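To make the "equal segments" idea concrete, here is a rough arithmetic sketch. It assumes a 4096-byte page (TOY_PAGE_SIZE) and ignores any per-object metadata or slab header that a real allocator may add. The helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_PAGE_SIZE 4096u   /* assumed page size for this sketch */

/* Round size up to a multiple of align (align must be a power of two). */
static size_t toy_align_up(size_t size, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (size + align - 1) & ~(align - 1);
}

/* Number of equal segments that fit in a slab of `pages` contiguous pages. */
static size_t toy_objects_per_slab(size_t pages, size_t obj_size, size_t align)
{
    return (pages * TOY_PAGE_SIZE) / toy_align_up(obj_size, align);
}

/* Address of segment number idx in a slab that starts at base. */
static void *toy_object_at(void *base, size_t idx, size_t obj_size,
                           size_t align)
{
    return (char *)base + idx * toy_align_up(obj_size, align);
}
```

For a 100-byte object with 8-byte alignment, each segment takes 104 bytes, so a one-page slab holds 39 objects and 40 bytes at the end go unused.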

How to use the slab memory allocator?

kmem_cache_alloc() Kernel

void *kmem_cache_alloc(struct kmem_cache *s, gfp_t gfpflags)

{

void *ret = slab_alloc_node(s, NULL, gfpflags, NUMA_NO_NODE, _RET_IP_, s->object_size);

//...

// ret is the HVA (host virtual address) of the allocated object, which is why its type is void *.

return ret;

}

struct kmem_cache Kernel

struct kmem_cache {

#ifndef CONFIG_SLUB_TINY

struct kmem_cache_cpu __percpu *cpu_slab;

#endif

/* Used for retrieving partial slabs, etc. */

slab_flags_t flags;

unsigned long min_partial;

unsigned int size; /* Object size including metadata */

// Size of each object in this cache; consulted when deciding how to allocate

unsigned int object_size; /* Object size without metadata */

struct reciprocal_value reciprocal_size;

unsigned int offset; /* Free pointer offset */

#ifdef CONFIG_SLUB_CPU_PARTIAL

/* Number of per cpu partial objects to keep around */

unsigned int cpu_partial;

/* Number of per cpu partial slabs to keep around */

unsigned int cpu_partial_slabs;

#endif

struct kmem_cache_order_objects oo;

/* Allocation and freeing of slabs */

struct kmem_cache_order_objects min;

gfp_t allocflags; /* gfp flags to use on each alloc */

int refcount; /* Refcount for slab cache destroy */

void (*ctor)(void *object); /* Object constructor */

unsigned int inuse; /* Offset to metadata */

unsigned int align; /* Alignment */

unsigned int red_left_pad; /* Left redzone padding size */

const char *name; /* Name (only for display!) */

struct list_head list; /* List of slab caches */

#ifdef CONFIG_SYSFS

struct kobject kobj; /* For sysfs */

#endif

#ifdef CONFIG_SLAB_FREELIST_HARDENED

unsigned long random;

#endif

#ifdef CONFIG_NUMA

/*

* Defragmentation by allocating from a remote node.

*/

unsigned int remote_node_defrag_ratio;

#endif

#ifdef CONFIG_SLAB_FREELIST_RANDOM

unsigned int *random_seq;

#endif

#ifdef CONFIG_KASAN_GENERIC

struct kasan_cache kasan_info;

#endif

#ifdef CONFIG_HARDENED_USERCOPY

unsigned int useroffset; /* Usercopy region offset */

unsigned int usersize; /* Usercopy region size */

#endif

struct kmem_cache_node *node[MAX_NUMNODES];

};